I always enjoy seeing how others have set up their work environments, but I realized that I have never shared my own. I’ll do my best to list all the items in the picture and explain what I have tried to achieve.

Philosophically, I want a very clean workspace. I find that when my surroundings are neat and clean, I am able to focus more on whatever I am doing, whether work or play. This applies particularly to what is in my eyeline when I am at my desk. The rest of the room is generally neat, but if I can’t see it directly, I worry less about it. More specifically, this means not having clutter on my desk, especially when it comes to wires. My goal is to have as close to zero wires visible from where I sit as possible, and I am happy to say I have come about as close as I think is possible at this point.

The Desk

The desktop itself is part of an old desk that was given to me by a dear friend when I got my first apartment over 20 years ago. It is oak veneer over particleboard, I think, but it is very solid and very heavy. Years ago I converted it to use metal legs, which is why you can see the intentionally exposed ends of carriage bolts at the corners. Those bolts are no longer used, but I still like the look. About a year ago, I replaced those legs with adjustable-height legs from Uplift. They are not the cheapest, but the quality is excellent and Uplift’s support is great: when one leg started making an odd noise, they sent me a replacement, no questions asked.

Yes, there are many computing devices on my desk. Here is the rundown.

Computers

- minas-tirith - Apple MacBook Pro (16-inch, 2023) with an M2 Pro - This is my work computer. It sits in a Twelve South BookArc partially behind the right-hand monitor.

- hobbiton - Apple MacBook Pro (16-inch, 2021) with an M1 Pro - This is my personal computer.

- minas-ithil - Mac Pro (Late 2013) with the 3 GHz 8-Core Intel Xeon E5 - I always wanted one of these, and I recently came into one. I use it to run a few applications that I want running all the time. It also looks pretty on my desk.

- sting - iPhone 14 Pro on a Twelve South Forté - It is currently running the iOS 17 beta so I can use StandBy mode to show pictures.

- narsil - 12.9” iPad Pro (5th generation) with an M1 and an Apple Pencil

Computer Accessories

- Two LG 4K monitors (27UK850) - They are fine, but there is nothing special about them.

- Amazon Basics dual VESA mount arm - Nothing special here either; it works. I don’t move the monitors around, so it does an acceptable job of holding them in place.

- CalDigit TS4 - This is the center of my system. A single Thunderbolt cable connects it to my work MBP, and all of my accessories plug into it. It is not cheap, but it is rock solid and makes my “no visible cables” policy possible. It connects to both monitors, all the USB accessories, and my home network over wired GbE.

- CalDigit TS3+ - I had this before upgrading to the TS4. I still use it to connect my personal MBP to things.

- Apple Magic Keyboard with Touch ID and Numeric Keypad - I like the dark look, and Touch ID is great for authenticating, especially with 1Password.

- Apple Magic Trackpad - I have been known to switch back and forth between a trackpad and a mouse, but I am in trackpad land now.

- Dell Webcam WB7022 - I got this for free at some point and I mostly love it because it reminds me of the old Apple iSight camera.

- Harman Kardon SoundSticks - These are the original version from around 2000, which I got with an old Power Mac G4. They still sound great.

- Audio-Technica ATR2100x-USB microphone - I got this on the recommendation of the fine folks at Six Colors.

- Elgato low-profile Wave Mic Arm - I had a boom arm before, but I hated having it in front of me. This does a great job of keeping the mic low and out of the camera’s view when I am using it.

- Bose QC35 II noise-cancelling headphones - I don’t use them at home all that often, but they are amazing for travel.

- FiiO A1 Digital Amplifier - This is connected to the TS4’s audio output, and I use it to play music through a pair of Polk bookshelf speakers out of frame.

- Cables - Where I do need cables, I generally go with Anker or Monoprice. I like the braided cables they offer.

Desk Accessories

- Grovemade Wool Felt Desk Pad - In the winter, my desk gets cold, which makes my arms cold. This adds a warm layer that looks good and absorbs sound as well.

- Wood cable holder - I can’t find where I originally ordered mine, but the idea is simple: it keeps charging cables within reach but out of sight. It has been a real game changer.

- Field Notes notebooks - I like to keep a physical record of what I work on. There are no better small notebooks than these.

- Studio Neat Mark One pen - I am not a pen geek, but this one is just gorgeous and I love writing with it.

- Zwilling Sorrento Double Wall glass - This is great for cold beverages in humid climates, because it won’t sweat all over your desk.

Lighting

- Two Nanoleaf smart bulbs - These are mounted behind my monitors, facing the angled wall, to provide indirect light.

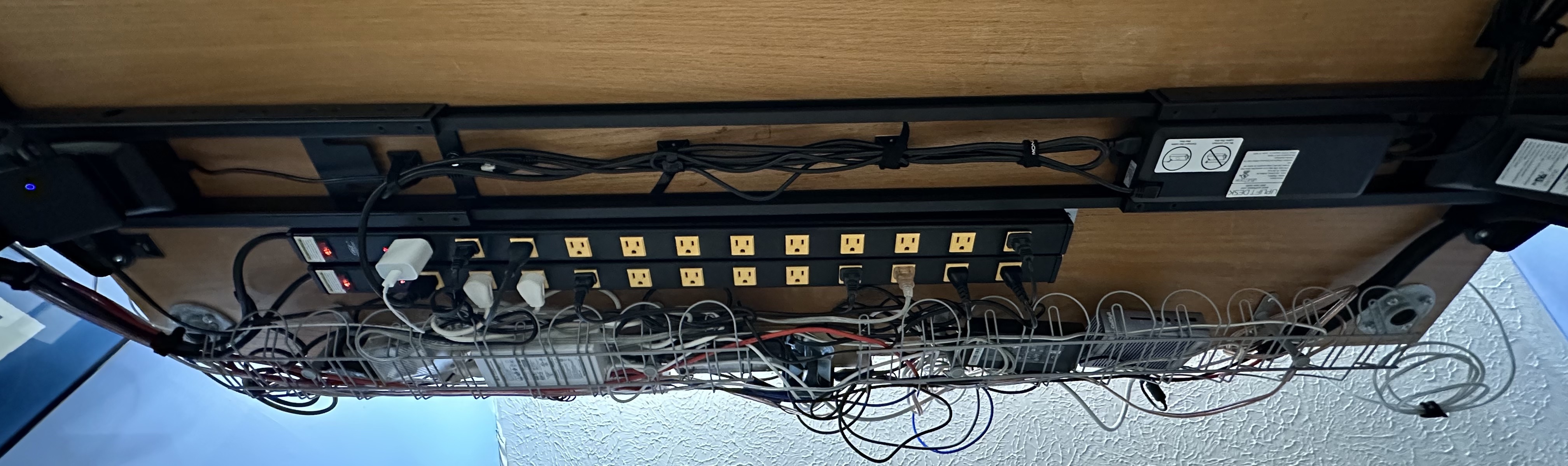

Underneath

I am actually pretty proud of the contained chaos under the desk. Everything is secured well enough that my legs don’t hit any cables, which is more than I can say for many of my past desks.

- IKEA SIGNUM cable organizer trays - These are great for mounting to the underside of a desk to keep things contained.

- Cord Protector Wire Loom Tubing - This is really nice for keeping cables grouped together, especially when you need to leave enough slack for a standing desk to move up and down.

- CyberPower CP1500PFCLCD PFC Sinewave UPS - We get thunderstorms, so a UPS is required.

- Two CRST Heavy Duty Surge Protector Power Strips - These have plenty of outlets, spaced far enough apart that wall warts don’t bump into each other.

- Anker USB-C Charger - This powers the charging cables on the cord hanger attached to the side of the desk. It has a nice mix of USB-C and USB-A ports.

- Monoprice Velcro wire wraps - These are a lifesaver for organizing cables.