A couple of factors have gotten me back to building things in my spare time. As my job has shifted back toward more management and less coding, my desire to code on my own time has increased. But if I am being honest, the bigger reason is Claude. It has done two things. For bigger projects like the iOS ones below, it has allowed me to act more as a product manager than a developer and build the things I have in my head without having to re-learn modern Swift and SwiftUI. It has also lowered the barrier to entry for turning manual processes into applications and scripts, and this is the one I feel is more transformational on a day-to-day basis. Before, I would have been mildly annoyed doing a manual process, but not annoyed enough to automate it myself. Now, using the robots, I can automate it in far less time.

So what have I been up to?

iOS Apps

-

Amiko - You may remember Amiko from a long time ago. It is an app I hand-built to help you keep track of when you last saw your friends and remind you to keep in touch. The app languished over the years without updates and eventually got pulled from the App Store. Rather than trying to update the ancient codebase, I started fresh and built a new app from scratch using modern practices. It is almost ready to be released.

-

Fantazio - One of my COVID projects, built to help Dungeon Masters run tabletop games using digital maps. It also languished (are you sensing a trend?), and while it was still on the store, it was outdated. I was able to update it to run on the latest devices and add some new features.

-

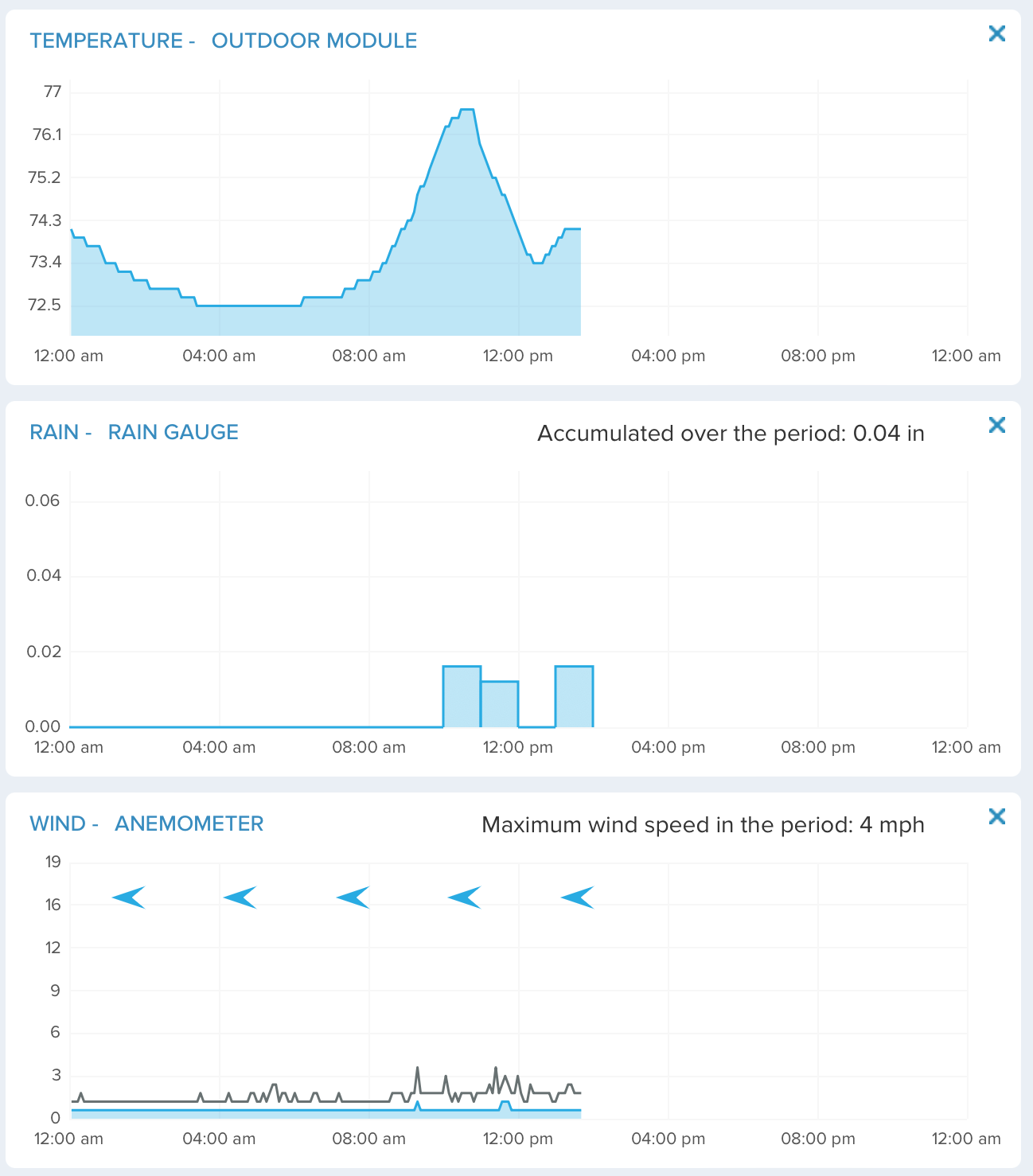

Grafo - This one is still in progress. I use Zabbix as my home server and network monitoring tool, and I wanted an app that uses the Zabbix API to show that data on my mobile devices. It is coming along, and I’ll probably try to release it in the coming weeks.

Random Things

-

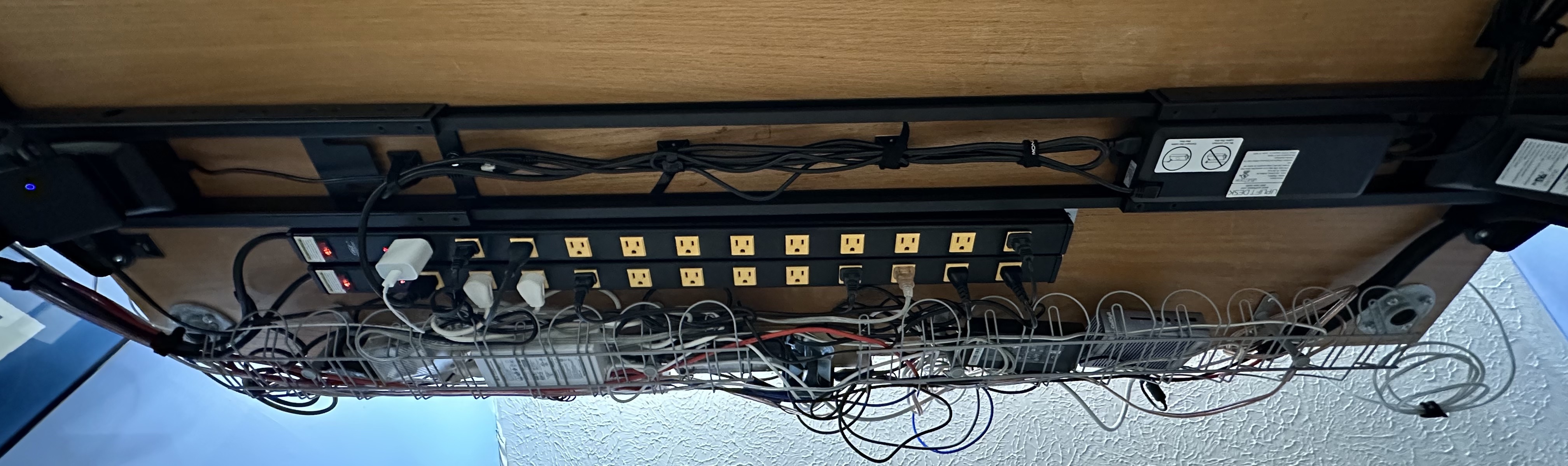

iot_monitor - I run all my IoT devices on a segmented VLAN with really restrictive firewall rules. Gathering the data for this used to be an exercise of sitting with `tcpdump` and trying to capture all the data I needed. `iot_monitor` is a small tool I can use to watch the network, and it captures just what I need for my firewall rules. Is it useful for anyone else? No idea.

-

today_overview - I have a local LLM server running that I use for more private data and API things. This program is able to collect a bunch of iCal feeds, extract today’s events, then forward them on to your local LLM to give you a summary of your day. I have this emailed to me every morning so I can get a quick sense of what my day will be like.

-

A bunch of ESPHome device extensions: Soil moisture sensor 1 and Soil moisture sensor 2. I’ve been playing with building my own monitoring hardware to go with Home Assistant, and sometimes that hardware isn’t supported directly.
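To give a feel for the today_overview flow described above (collect iCal text, keep today’s events, hand them to the LLM), here is a minimal Python sketch. It is not the actual program: the function names `unfold`, `events_on`, and `build_prompt` are made up for illustration, it uses only the standard library, it ignores time zones and recurring events, and it stops at building the prompt text rather than assuming anything about how the local LLM server is called.

```python
from datetime import date


def unfold(ical_text: str) -> list[str]:
    """Join folded iCalendar lines (continuation lines start with a space or tab)."""
    lines: list[str] = []
    for raw in ical_text.splitlines():
        if raw[:1] in (" ", "\t") and lines:
            lines[-1] += raw[1:]
        else:
            lines.append(raw)
    return lines


def events_on(ical_text: str, day: date) -> list[tuple[str, str]]:
    """Return sorted (start, summary) pairs for VEVENTs whose DTSTART is on `day`.

    Simplification: the date is read straight from the DTSTART value, so time
    zones and recurrence rules (RRULE) are not handled.
    """
    wanted = day.strftime("%Y%m%d")
    events, current = [], None
    for line in unfold(ical_text):
        if line == "BEGIN:VEVENT":
            current = {}
        elif line == "END:VEVENT" and current is not None:
            start = current.get("DTSTART", "")
            if start[:8] == wanted:
                events.append((start, current.get("SUMMARY", "(no title)")))
            current = None
        elif current is not None and ":" in line:
            name, value = line.split(":", 1)
            # Drop parameters such as ";TZID=..." from the property name.
            current[name.split(";", 1)[0]] = value
    return sorted(events)


def build_prompt(events: list[tuple[str, str]], day: date) -> str:
    """Turn the day's events into plain text that could be sent to an LLM."""
    if not events:
        return f"I have no events on {day.isoformat()}."
    lines = [f"Summarize my day for {day.isoformat()}:"]
    for start, summary in events:
        # "20240305T090000" -> "09:00"; all-day events have no time part.
        when = f"{start[9:11]}:{start[11:13]}" if "T" in start else "all day"
        lines.append(f"- {when} {summary}")
    return "\n".join(lines)
```

In practice you would run `events_on` over each downloaded feed, merge the results, and send the output of `build_prompt` to whatever LLM endpoint you have on hand.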

Tons of shell scripts

-

A shell script to take an ebook file, convert it to the formats I need, and import it into my Calibre library without having to use the Calibre UI, which is…not pretty.

-

A shell script to automate backing up various docker volumes because there was no way I was going to do it by hand.

-

A whole bunch more I can’t remember right now.
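For a sense of what the Docker volume backup boils down to, here is a rough sketch in Python rather than shell. It is not my actual script: `backup_command`, the `alpine` image, and the archive naming are all assumptions for illustration. The idea is the common pattern of mounting the volume read-only into a throwaway container and writing a tarball to a mounted backup directory.

```python
import subprocess
from datetime import date
from pathlib import Path


def backup_command(volume: str, backup_dir: Path, day: date) -> list[str]:
    """Build a `docker run` command that tars one named volume into backup_dir.

    backup_dir should be an absolute path so Docker treats it as a bind mount.
    """
    archive = f"{volume}-{day.isoformat()}.tar.gz"
    return [
        "docker", "run", "--rm",
        "-v", f"{volume}:/data:ro",      # the volume to back up, read-only
        "-v", f"{backup_dir}:/backup",   # where the archive is written
        "alpine",                        # assumed image; any image with tar works
        "tar", "czf", f"/backup/{archive}", "-C", "/data", ".",
    ]


def backup_all(volumes: list[str], backup_dir: Path) -> None:
    """Back up each volume in turn, stopping at the first failure."""
    for volume in volumes:
        subprocess.run(backup_command(volume, backup_dir, date.today()), check=True)
```

Calling `backup_all(["some_volume"], Path("/srv/backups"))` from cron would be the "never do it by hand" part; the volume name and path here are placeholders.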

The truth is, I wouldn’t have done any of this if I didn’t have access to the newer LLM-based coding assistants. I don’t know what this means for the profession as a whole, but I can say it has made me more productive on fun little projects.